3. NGINX Ingress Controller

The NGINX Ingress Controller is a popular open-source project that extends Kubernetes to manage and load balance application traffic within a cluster. It acts as an Ingress controller for Kubernetes: it implements the Ingress resource and provides advanced features for routing and managing HTTP, HTTPS, and TCP traffic to services in the cluster.

Here are some key features and functions of the NGINX Ingress Controller:

Ingress Resource Management : It extends Kubernetes’ Ingress resource by adding more features and flexibility for routing traffic to services based on hostnames, paths, and other criteria.

Load Balancing : The controller can distribute incoming traffic to different backend services based on rules defined in the Ingress resource. It supports round-robin, least connections, and IP hash load balancing methods.

SSL/TLS Termination : It can terminate SSL/TLS encryption for incoming traffic, enabling secure communication between clients and services.

Rewrites and Redirections : The controller allows you to define URL rewrites and redirections to customize the behavior of incoming requests.

Path-Based Routing : You can route traffic based on the URL path to different services, allowing for microservices architecture and application segregation.

Web Application Firewall (WAF) : It supports the integration of Web Application Firewall (WAF) solutions for enhanced security.

Custom Error Pages : You can define custom error pages for specific HTTP error codes.

TCP and UDP Load Balancing : Besides HTTP/HTTPS traffic, the controller can handle TCP and UDP traffic as well.

Authentication and Authorization : It can be configured to enforce authentication and authorization for incoming requests.

Custom Configurations : You can customize NGINX configurations to meet specific requirements by using ConfigMaps and Annotations.

Auto-Scaling : The controller Deployment can be scaled automatically, for example with a Horizontal Pod Autoscaler, so that the number of NGINX pods grows with the traffic load.

Metrics and Monitoring : It provides metrics and logs for monitoring and troubleshooting.
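
As a rough illustration of the path-based routing feature listed above, the sketch below sends two URL prefixes on one host to two different Services. The host name, the Ingress name, the Service names (web-frontend, api-backend), and the ports are hypothetical placeholders, not values from this guide.

```yaml
# Minimal sketch: path-based routing on a single host.
# All names and ports below are assumptions for illustration.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: path-routing-example
spec:
  ingressClassName: nginx
  rules:
    - host: app.my-example.com
      http:
        paths:
          - path: /api          # requests under /api go to the API Service
            pathType: Prefix
            backend:
              service:
                name: api-backend
                port:
                  number: 8080
          - path: /             # everything else goes to the frontend
            pathType: Prefix
            backend:
              service:
                name: web-frontend
                port:
                  number: 80
```

When several Prefix paths match a request, the longest matching path takes precedence, so /api requests reach api-backend even though / also matches.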

When NGINX is deployed to your Kubernetes cluster, a Load Balancer is created as well, through which the controller receives outside traffic. You then set up a domain with A records (hosts) that point to your load balancer’s external IP. The data flow is: User Request -> Host.DOMAIN -> Load Balancer -> Ingress Controller (NGINX) -> Backend Applications (Services).

In a real-world scenario, you do not want one Load Balancer per service, so you need a proxy inside the cluster; the Ingress Controller provides it. Like every Ingress Controller, NGINX lets you define Ingress objects. Each Ingress object contains a set of rules that define how to route external traffic (HTTP requests) to your backend services. For example, you can define multiple hosts under a single domain and let NGINX route traffic for each host to the correct service.
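
The multi-host case described above could look roughly like the following sketch, where one Ingress object (and therefore one Load Balancer) serves two hosts under the same domain. The host names and the Service names (shop, blog) are hypothetical and only serve the illustration.

```yaml
# Minimal sketch: two hosts under one domain, served by a single Ingress.
# Host names and Service names are assumptions, not taken from this guide.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: multi-host-example
spec:
  ingressClassName: nginx
  rules:
    - host: shop.my-example.com   # first host, routed to the "shop" Service
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: shop
                port:
                  number: 80
    - host: blog.my-example.com   # second host, routed to the "blog" Service
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: blog
                port:
                  number: 80
```

Both hosts need A records pointing at the same load balancer IP; NGINX then selects the backend from the Host header of each request.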

The NGINX Ingress Controller is deployed via Helm and can be managed the usual way.

To learn more about the community-maintained version of the NGINX Ingress Controller, check the official Kubernetes documentation.

Getting Started After Deploying NGINX Ingress Controller

Please refer to this document to connect to your Kubernetes cluster.

Once kubectl is configured for your cluster, run the following commands to add and refresh the ingress-nginx Helm repository.

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

Confirming that NGINX Ingress Controller is running

First, check that the Helm installation was successful by running the command below:

helm ls -n ingress-nginx

The STATUS column should show deployed.

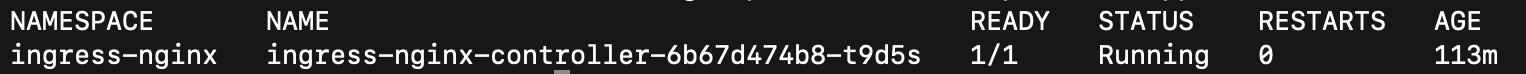

Next, verify that the NGINX Ingress Controller Pods are up and running:

kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx

The Pods should be in the Ready state with a Running status.

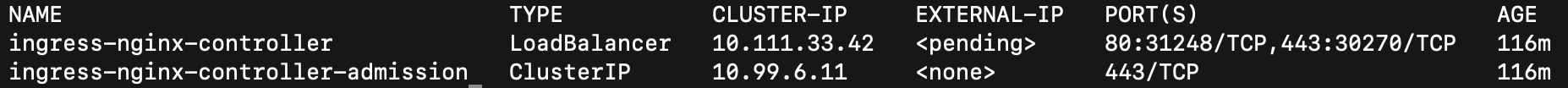

Finally, inspect the external IP address of your NGINX Ingress Controller Load Balancer by running the command below:

kubectl get svc -n ingress-nginx

Check that a valid external IP is attached to the Service of type LoadBalancer.

If the EXTERNAL-IP of the LoadBalancer Service stays in pending status and you have no available IP, attach an IP to the cluster from the LB IP POOL tab.

To attach an IP to the cluster, please refer to this link: https://docs.e2enetworks.com/kubernetes/kubernetes.html#loadbalancer

To attach a domain name to the IP, please refer to this link: https://docs.e2enetworks.com/networking/dns.html

Tweaking Helm Values

The NGINX Ingress stack provides some custom values to start with. See the values file from the main GitHub repository for more information.

You can inspect all the available options, as well as the default values for the NGINX Ingress Helm chart, by running the following command:

helm show values ingress-nginx/ingress-nginx --version 4.7.2

After customizing the Helm values file (values.yml), you can apply the changes via helm upgrade command, as shown below:

helm upgrade ingress-nginx ingress-nginx/ingress-nginx --version 4.7.2 --namespace ingress-nginx --values values.yml
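
For orientation, a values.yml might contain overrides along the lines of the sketch below. The keys are ones the ingress-nginx chart exposes under controller, but the specific numbers are arbitrary assumptions; check them against the output of helm show values for your chart version before applying.

```yaml
# values.yml: illustrative overrides only; every value here is an assumption.
controller:
  metrics:
    enabled: true              # expose Prometheus metrics from the controller
  config:                      # entries rendered into the controller ConfigMap
    proxy-body-size: "16m"     # example NGINX setting: max request body size
  resources:
    requests:
      cpu: 100m                # CPU-based autoscaling needs a CPU request
      memory: 128Mi
  autoscaling:
    enabled: true              # creates a Horizontal Pod Autoscaler
    minReplicas: 2
    maxReplicas: 5
    targetCPUUtilizationPercentage: 75
```

Autoscaling of this kind assumes the cluster has a metrics source such as metrics-server; without one, the autoscaler cannot read CPU usage.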

Configuring NGINX Ingress Rules for Services

To expose backend applications (services) to the outside world, you specify the mapping between hosts and services in an Ingress resource. NGINX follows a simple pattern in which you define a set of rules. Each rule associates a host with a backend service via a corresponding path prefix.

A typical Ingress resource for NGINX looks like this:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: service-a # name of your choice
    spec:
      ingressClassName: nginx
      rules:
        - host: ingress.my-example.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: service-a # name of service you want to run
                    port:
                      number: 80 # value of port on your service

Explanations for the above configuration:

spec.rules : A list of host rules used to configure the Ingress. If unspecified, or no rule matches, all traffic is sent to the default backend.

spec.rules.host : Host is the fully qualified domain name of a network host (e.g.: ingress.my-example.com).

spec.rules.http: List of http selectors pointing to backends.

spec.rules.http.paths : A collection of paths that map requests to backends. In the above example, the / path prefix is matched with the service-a backend service, running on port 80.

The above Ingress resource tells NGINX to route each HTTP request that uses the / prefix for the ingress.my-example.com host to the service-a backend service running on port 80. In other words, every call to http://ingress.my-example.com/ is served by the service-a backend service running on port 80.
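
The Ingress only references a Service by name and port, so a matching Service has to exist in the same namespace. A minimal sketch of such a Service is shown below; the pod label (app: service-a) and the container port 8080 are assumptions for illustration.

```yaml
# Minimal sketch of the Service that the Ingress backend refers to.
# The selector label and targetPort are assumed values.
apiVersion: v1
kind: Service
metadata:
  name: service-a        # must match backend.service.name in the Ingress
spec:
  selector:
    app: service-a       # assumed label on the application pods
  ports:
    - port: 80           # must match backend.service.port.number in the Ingress
      targetPort: 8080   # assumed port the application container listens on
```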

To serve requests on https://ingress.my-example.com/, you need a TLS/SSL certificate. A typical NGINX Ingress rule for that case is:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: service-a # name of your choice
    spec:
      ingressClassName: nginx
      rules:
        - host: ingress.my-example.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: service-a # name of service you want to run
                    port:
                      number: 80
      tls:
        - hosts:
            - ingress.my-example.com
          secretName: service-a-secret

Explanations for the above configuration:

spec.tls : A list of entries, each pairing a list of hosts with the secretName of the Secret that holds the TLS certificate for those hosts.

NOTE : secretName is the name of the Secret you created when issuing the certificate with cert-manager.

Please refer to the cert-manager documentation for further information. https://docs.e2enetworks.com/kubernetes/kubernetes_marketplace.html#cert-manager
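
If cert-manager is installed, the Secret referenced above is typically produced by a Certificate resource. The sketch below shows one possible shape; the Certificate name and the issuer (a ClusterIssuer called letsencrypt-prod) are hypothetical and must be replaced with an issuer that actually exists in your cluster.

```yaml
# Minimal sketch: cert-manager Certificate that writes service-a-secret.
# The Certificate name and the issuer name are assumptions.
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: service-a-cert
spec:
  secretName: service-a-secret   # must match spec.tls[].secretName in the Ingress
  dnsNames:
    - ingress.my-example.com     # host covered by the certificate
  issuerRef:
    name: letsencrypt-prod       # hypothetical issuer name
    kind: ClusterIssuer
```

The Certificate should live in the same namespace as the Ingress so that the resulting Secret is visible to it.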

For further information, see the Ingress documentation.

Upgrading the NGINX Ingress Chart

You can check which versions are available to upgrade to by navigating to the ingress-nginx official releases page on GitHub. Alternatively, you can use Artifact Hub.

Then, to upgrade the stack to a newer version, run the following command:

helm upgrade ingress-nginx ingress-nginx/ingress-nginx --version <INGRESS_NGINX_STACK_NEW_VERSION> --namespace ingress-nginx --values <YOUR_HELM_VALUES_FILE>

See helm upgrade for more information about the command.

Upgrading With Zero Downtime in Production

By default, the ingress-nginx controller has service interruptions whenever its pods are restarted or redeployed. To fix that, see this blog post by Lindsay Landry from Codecademy.
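
As a starting point, and not as a summary of that blog post, the Helm values below sketch one common way to reduce interruptions during a rollout: run more than one replica and replace pods one at a time. The numbers are assumptions to adapt to your own traffic.

```yaml
# Illustrative Helm values for gentler rollouts; all numbers are assumptions.
controller:
  replicaCount: 2          # keep at least one pod serving while another restarts
  minAvailable: 1          # used by the chart's PodDisruptionBudget
  minReadySeconds: 10      # wait before a new pod counts as available
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0    # never remove an old pod before its replacement is ready
      maxSurge: 1          # add one new pod at a time
```

These settings can be merged into the same values file that is passed to helm upgrade.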

Uninstalling the NGINX Ingress Controller

To delete your installation of NGINX Ingress Controller, run the following command:

helm uninstall ingress-nginx -n ingress-nginx

NOTE: The command deletes all the Kubernetes resources installed by the ingress-nginx Helm chart, except the namespace itself. To delete the ingress-nginx namespace as well, run the command below:

kubectl delete ns ingress-nginx