Fine-tune Stable Diffusion model on TIR

In this tutorial, we will fine-tune Stability AI’s Stable Diffusion (v2.1) model with the DreamBooth and Textual Inversion training methods, using the Hugging Face Diffusers library. With just 3-5 images, we can teach Stable Diffusion new concepts and personalize the model on our own images.

About Training methods

The Stable Diffusion model can be fine-tuned with either of two training methods: DreamBooth or Textual Inversion. Before training the model, let us get a brief overview of both.

DreamBooth

DreamBooth is a way to fine-tune Stable Diffusion to generate a subject in different environments and styles. It teaches the diffusion model about a specific object or style using approximately three to five example images. After the model is fine-tuned on a specific object, it can produce images containing that object in new settings. You can read the original paper here. Here’s how it works:

You provide a set of reference images to teach the model how the subject looks

You re-train the model to learn to associate that subject with a specific word

You prompt the new model using the special word

With DreamBooth, we train an entirely new version of Stable Diffusion: a fully self-contained model that can yield very good results, at the cost of a much larger output, since the whole model is saved rather than a small embedding.

Textual Inversion

The Textual Inversion training method captures new concepts from a small number of example images and associates them with new words in the textual embedding space of the pre-trained model. Using only 3-5 images of a user-provided concept, such as an object or a style, we learn to represent it through new “words” in the textual embedding space. These “words” can be composed into natural-language sentences in text prompts, guiding personalized image creation in an intuitive way. You can read the original paper here. Here’s how it works:

You provide a set of reference images of your subject

You figure out what specific embedding the model associates with those images

You prompt the new model using the embedding

Textual Inversion is akin to finding a “special word” that gets your model to produce the images you want, while the model weights themselves stay unchanged.
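To make the “special word” idea concrete, here is a toy, dependency-free sketch of the bookkeeping Textual Inversion performs. A new placeholder token is appended to the vocabulary, its embedding row is initialized from an existing, related word, and only that row is updated during training while every other row stays frozen. The vocabulary, the two-dimensional vectors, the gradient values and the helper names are all made up for illustration. This is not the CLIP tokenizer or the Diffusers training code.

```python
from typing import Dict, List


def add_placeholder_token(
    vocab: Dict[str, int],
    embeddings: List[List[float]],
    placeholder: str,
    initializer: str,
) -> int:
    """Append `placeholder` to the vocabulary, starting from the initializer's vector."""
    if placeholder in vocab:
        raise ValueError(f"{placeholder!r} already exists in the vocabulary")
    new_id = len(embeddings)
    vocab[placeholder] = new_id
    # Copy (not alias) the initializer's row so the two can diverge during training.
    embeddings.append(list(embeddings[vocab[initializer]]))
    return new_id


def update_only_row(embeddings: List[List[float]], row_id: int,
                    grads: List[float], lr: float) -> None:
    """One gradient-descent step on a single embedding row; all other rows stay frozen."""
    embeddings[row_id] = [w - lr * g for w, g in zip(embeddings[row_id], grads)]


# Toy vocabulary and embedding table (hypothetical values).
vocab = {"a": 0, "toy": 1, "cat": 2}
embeddings = [[0.1, 0.2], [0.5, -0.3], [0.9, 0.4]]

new_id = add_placeholder_token(vocab, embeddings, "<cat-toy>", "toy")
update_only_row(embeddings, new_id, grads=[1.0, -1.0], lr=0.1)
print(vocab["<cat-toy>"], embeddings[new_id])
```

After the update, only the `<cat-toy>` row has moved. The original `toy` vector is untouched, which is why a Textual Inversion result can be shipped as a tiny embedding file instead of a full model.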

Fine-tuning the model

Step-1: Launch a Notebook on TIR

Before beginning, let us launch a Notebook on TIR to train our model. To launch the notebook, follow the steps below:

Go to TIR Dashboard

Choose a Project, navigate to the Notebooks section and click on Create Notebook button

Let us name the notebook as stable-diffusion-fine-tune. You can give any name of your choice.

Notebook Image & Machine: Launch a new Notebook with the Diffusers Image and a GPU Machine plan. To fine-tune the model, we recommend choosing a GPU plan from the A100, A40 or A30 series (e.g., GDC3.A10080-16.115GB).

Disk Size: A default Disk Size of 30GB would be sufficient for our use-case. In case you need more space, set the disk size accordingly.

Datasets: If you have your training data stored in a TIR Dataset, select that dataset to be able to use it during fine tuning.

Create the notebook

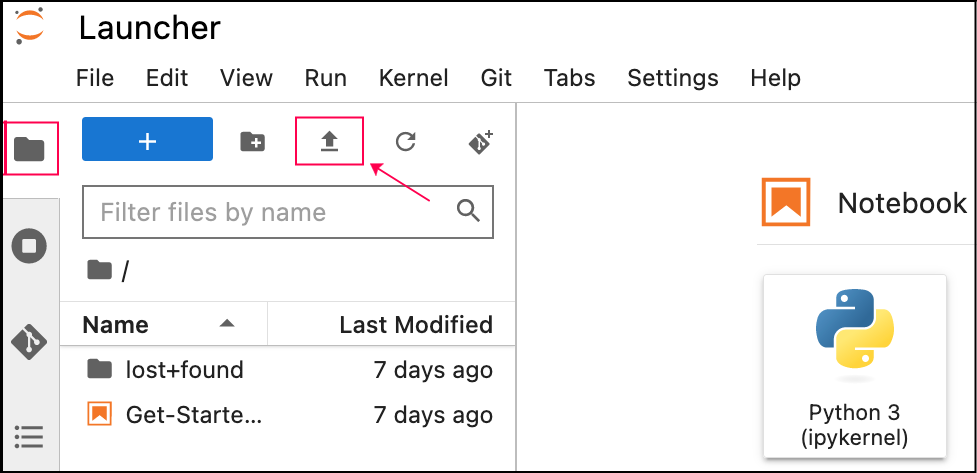

Launch Notebook: Once the notebook is in the Running state, launch it by clicking the three dots (...) and start the JupyterLab environment. (The sidebar on the left also displays quick-launch links for your notebooks.) In JupyterLab, create a new Python 3 notebook from the New Launcher window. A new .ipynb file will open in JupyterLab.

Our notebook is ready. Now, let’s proceed with the model training.
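Before installing anything, it can be worth confirming that the notebook actually sees the GPU from the plan you picked. The sketch below assumes that the NVIDIA driver's `nvidia-smi` tool is on the PATH, which is typical for GPU images but is an assumption here. The helper name `gpu_summary` is hypothetical.

```python
import shutil
import subprocess


def gpu_summary() -> str:
    """Return the `nvidia-smi -L` GPU listing, or a hint if the tool is missing."""
    if shutil.which("nvidia-smi") is None:
        return "nvidia-smi not found: this notebook may not be running on a GPU plan"
    result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True, check=False)
    # Fall back to stderr so driver errors are visible instead of an empty string.
    return result.stdout.strip() or result.stderr.strip()


print(gpu_summary())
```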

Note

This tutorial covers both the DreamBooth and Textual Inversion training methods. Most of the steps and code are the same for both methods; where they differ, we have used tabs to separate the two.

Step-2: Initial Setup

Install the required libraries
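The install cell itself is not reproduced here. Once it has run, a quick check like the following can confirm that everything is importable before you spend GPU time. The module list is an assumption based on the libraries this tutorial relies on, so adjust it to match what you actually installed. `PIL` is the import name of the `pillow` package.

```python
import importlib.util

# Assumed import names; edit this list to match your install cell.
REQUIRED_MODULES = ["torch", "diffusers", "transformers", "accelerate", "PIL", "requests"]


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level import names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules()
print("All required libraries found" if not missing else f"Missing: {missing}")
```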

Import the required libraries and packages

Now, let’s define some helper functions that we will need later during the training and inference process.
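The original helper cell is not reproduced here. One helper that is commonly used for this purpose, and appears in the Hugging Face example notebooks, pastes several images into a single grid so that training images or generated samples can be viewed at a glance. The sketch below assumes the name `image_grid` and the `(imgs, rows, cols)` signature, and requires Pillow.

```python
from PIL import Image


def image_grid(imgs, rows, cols):
    """Paste `rows * cols` equally sized PIL images into one grid image."""
    if len(imgs) != rows * cols:
        raise ValueError(f"expected {rows * cols} images, got {len(imgs)}")
    w, h = imgs[0].size
    grid = Image.new("RGB", size=(cols * w, rows * h))
    for i, img in enumerate(imgs):
        # Row-major placement: image i goes to column i % cols, row i // cols.
        grid.paste(img, box=(i % cols * w, i // cols * h))
    return grid
```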

Step-3: Settings for teaching the new concept

3.1. Defining the Model

Specify the Stable Diffusion model that we want to train. For this tutorial, we will use Stable Diffusion v2.1 (stabilityai/stable-diffusion-2-1)
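In code, this step comes down to a model identifier. The sketch below uses the variable name `pretrained_model_name_or_path`, which follows the Diffusers convention but is assumed here. The resolution lookup is our own addition: the public `stabilityai/stable-diffusion-2-1` checkpoint was trained at 768x768 and the `-base` variant at 512x512. The 512 fallback is an assumption.

```python
pretrained_model_name_or_path = "stabilityai/stable-diffusion-2-1"

# Native training resolution of the public SD 2.1 checkpoints (our note).
NATIVE_RESOLUTION = {
    "stabilityai/stable-diffusion-2-1": 768,
    "stabilityai/stable-diffusion-2-1-base": 512,
}

# Training images are usually resized to the model's native resolution.
resolution = NATIVE_RESOLUTION.get(pretrained_model_name_or_path, 512)
print(pretrained_model_name_or_path, resolution)
```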

3.2. Input Images

To teach the model a new concept, we need 3-5 input images to train it on. So let’s gather and set up the images for training.

There can be multiple sources for the input images. Set up the input images using any one of the following three sources:

Images available on the Internet

Using public image URLs, we can download the images from the internet and save them locally on the notebook.

```python
import os
from io import BytesIO

import requests
from PIL import Image

# Add the URLs of images of the concept you are teaching. 3-5 images should be enough.
urls = [
    "https://huggingface.co/datasets/valhalla/images/resolve/main/2.jpeg",
    "https://huggingface.co/datasets/valhalla/images/resolve/main/3.jpeg",
    "https://huggingface.co/datasets/valhalla/images/resolve/main/5.jpeg",
    "https://huggingface.co/datasets/valhalla/images/resolve/main/6.jpeg",
    # You can add additional image URLs here
]


def download_image(url):
    """Download one image; return None if the request fails."""
    try:
        response = requests.get(url, timeout=30)
        response.raise_for_status()
    except requests.RequestException:
        return None
    return Image.open(BytesIO(response.content)).convert("RGB")


# Download the images, skipping any URL that failed
images = [img for img in (download_image(url) for url in urls) if img is not None]

# Save the images in a local directory
save_path = "./my_concept"
os.makedirs(save_path, exist_ok=True)
for i, image in enumerate(images):
    image.save(f"{save_path}/{i}.jpeg")
```

Images stored in a TIR Dataset

To use the images stored in a TIR Dataset, ensure that the particular dataset containing your images is mounted on the notebook.

Note

How to check if your Dataset is mounted on the notebook?

Go to TIR Dashboard >> Notebooks Section >> Select the notebook >> Go to the Associated Datasets Tab

You will see the list of Datasets mounted on your notebook. If your desired dataset is not in the list, you can mount it now. You can also open the terminal and list all the mounted datasets using the ls /datasets/ command. If you have updated the mounted datasets, wait for your notebook to be back in the Running state, and start afresh from Step-2.

P.S.: The Dataset and the Notebook must be in the same project; otherwise, you cannot mount the dataset on the notebook.

Specify your dataset name and the directory path containing the images in the code block below, and run the code cell.

```python
import os

dataset_name = ""  # enter the dataset name
images_dir = ""    # enter the path to the directory containing the training images

# Resolves to "/datasets/{dataset_name}/{images_dir}"
images_path = os.path.join("/datasets/", str(dataset_name), str(images_dir))

if not dataset_name:
    print("dataset_name must be provided")
elif not os.path.exists(images_path):
    print("The images_path specified does not exist. Check the path and try again")
else:
    save_path = images_path
```

Images present on your local system

You can load your own training images by uploading them to the notebook using the Upload Files option.

```python
import os

# `images_path` is the path to the directory containing the training images
images_path = ""

if not os.path.exists(str(images_path)):
    print("The images_path specified does not exist. Check the path and try again")
else:
    save_path = images_path
```

Note

Make sure that your input image directory contains only the input images. The code reads every file in the provided directory, so any other files will interfere with the training process.

Before proceeding further, let us first check our training images.
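The original check cell is not reproduced here. The sketch below loads every image in the training directory and reports any other entries it finds, which ties in with the note above about keeping the directory clean. The helper name `load_training_images` and the accepted extensions are assumptions. The sketch requires Pillow.

```python
import os

from PIL import Image

IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".webp")  # assumed set of accepted formats


def load_training_images(path):
    """Open every image file in `path` (sorted by name) as an RGB PIL image."""
    entries = sorted(os.listdir(path))
    files = [
        f for f in entries
        if os.path.isfile(os.path.join(path, f)) and f.lower().endswith(IMAGE_EXTENSIONS)
    ]
    skipped = [f for f in entries if f not in files]
    if skipped:
        print(f"Warning: ignoring non-image entries: {skipped}")
    return [Image.open(os.path.join(path, f)).convert("RGB") for f in files]
```

In the notebook, you would call it on the `save_path` set in Step 3.2, e.g. `images = load_training_images(save_path)`, and then print or display the images to confirm that they are the ones you expect.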

3.3. Settings for the new concept
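The settings cell is not reproduced here. For Textual Inversion, it typically defines what kind of concept is being taught, the placeholder token used in prompts, and an existing word used to initialize the new embedding. The values below are hypothetical and modeled on the `<cat-toy>` example used later in this tutorial. The validation rules are conventions we assume for illustration, not hard requirements of the library. DreamBooth setups use an instance prompt instead.

```python
# Hypothetical concept settings, modeled on the <cat-toy> example in this tutorial.
what_to_teach = "object"          # "object" or "style"
placeholder_token = "<cat-toy>"   # the new "word" you will use in prompts
initializer_token = "toy"         # an existing word close to the concept


def validate_concept_settings(what, placeholder, initializer):
    """Raise ValueError if the settings break the (assumed) conventions above."""
    if what not in ("object", "style"):
        raise ValueError("what_to_teach must be 'object' or 'style'")
    if not (placeholder.startswith("<") and placeholder.endswith(">")):
        raise ValueError("use an unusual, bracketed placeholder such as <cat-toy>")
    if len(initializer.split()) != 1:
        raise ValueError("initializer_token must be a single word")


validate_concept_settings(what_to_teach, placeholder_token, initializer_token)
```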

Step-4: Teach the model the new concept (Fine-tuning with the training method)

Execute the sequence of cells below to run the training process. The whole process may take anywhere from 30 minutes to 3 hours. These cells also show how the process works under the hood, and where to change advanced training settings or hyperparameters.

4.1. Setup for Training

4.2. Training

Set up the training arguments and define the hyperparameters for training.

You can also tune hyperparameters such as learning_rate and max_train_steps to experiment with the results.
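The original arguments cell is not reproduced here. The sketch below groups typical hyperparameters in a dataclass. The values are illustrative Textual Inversion-style defaults, not the tutorial's own. DreamBooth runs in the Diffusers examples typically use a much smaller learning rate, on the order of 5e-6. The optional `scale_lr` rule mirrors the one in the Diffusers example scripts, which multiply the base learning rate by the effective batch size.

```python
from dataclasses import dataclass


@dataclass
class TrainingArgs:
    # Illustrative defaults only; the tutorial's own cell may use different values.
    output_dir: str = "sd-concept-output"   # hypothetical output directory name
    learning_rate: float = 5e-4
    max_train_steps: int = 2000
    train_batch_size: int = 1
    gradient_accumulation_steps: int = 4
    scale_lr: bool = True
    seed: int = 42

    def effective_batch_size(self, num_processes: int = 1) -> int:
        """Images seen per optimizer step across all devices."""
        return self.train_batch_size * self.gradient_accumulation_steps * num_processes

    def effective_learning_rate(self, num_processes: int = 1) -> float:
        """Base learning rate, optionally scaled by the effective batch size."""
        if not self.scale_lr:
            return self.learning_rate
        return self.learning_rate * self.effective_batch_size(num_processes)


args = TrainingArgs()
print(args.effective_batch_size(), args.effective_learning_rate())
```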

Define the training function
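The training function itself is not reproduced here. As a hedged summary of what one training step optimizes in the Diffusers example scripts (our description, not the tutorial's code):

- Each training image is encoded by the VAE into latents $z_0$.
- Gaussian noise $\epsilon$ is added at a random timestep $t$.
- The UNet, conditioned on the prompt embedding $c$, is trained with a mean-squared error against a target $y$.

Textual Inversion back-propagates this loss only into the placeholder token's embedding. DreamBooth updates the UNet weights, and optionally the text encoder. The `stabilityai/stable-diffusion-2-1` scheduler is configured for v-prediction, so the target is taken from the scheduler's prediction type rather than always being $\epsilon$.

```latex
z_t = \sqrt{\bar{\alpha}_t}\, z_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,
  \qquad \epsilon \sim \mathcal{N}(0, I)

\mathcal{L} = \mathbb{E}_{z_0,\, \epsilon,\, t}
  \left[ \left\lVert y - \hat{y}_\theta(z_t, t, c) \right\rVert_2^2 \right]

y = \begin{cases}
  \epsilon & \text{epsilon prediction} \\
  \sqrt{\bar{\alpha}_t}\, \epsilon - \sqrt{1 - \bar{\alpha}_t}\, z_0 & \text{v-prediction}
\end{cases}
```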

4.3. Run the Training

Step-5: Run Inference with the newly trained Model

Bravo! Our model training is complete and we have taught our model a new concept, namely, cat-toy.

Let us now test our newly trained model by running inference against it and see the results.

5.1. Set up the pipeline

The newly trained model artifacts are available in the output directory specified while setting up the training args. We will use those artifacts to load the model and set up the pipeline.
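The pipeline cell is not reproduced here. The sketch below shows one way to load the artifacts, assuming the output directory contains either a full Diffusers pipeline (identified by `model_index.json`, as DreamBooth saves) or only a learned embedding (`learned_embeds.bin` or `learned_embeds.safetensors`, as Textual Inversion saves). The helper names and the float16/CUDA choices are assumptions. `StableDiffusionPipeline.from_pretrained` and `load_textual_inversion` are existing Diffusers methods. The heavy imports are deferred so that the detection helper also works without a GPU stack.

```python
import os


def detect_artifact_type(output_dir):
    """Guess how to load `output_dir` from the files it contains."""
    files = set(os.listdir(output_dir))
    if "model_index.json" in files:
        return "full_pipeline"      # a complete Diffusers pipeline was saved
    if {"learned_embeds.bin", "learned_embeds.safetensors"} & files:
        return "embeddings_only"    # only the learned token embedding was saved
    raise FileNotFoundError(f"no recognised model artifacts in {output_dir!r}")


def load_pipeline(output_dir, base_model="stabilityai/stable-diffusion-2-1"):
    """Load a fine-tuned Stable Diffusion pipeline onto the GPU (hypothetical helper)."""
    import torch
    from diffusers import StableDiffusionPipeline

    kind = detect_artifact_type(output_dir)
    source = output_dir if kind == "full_pipeline" else base_model
    pipe = StableDiffusionPipeline.from_pretrained(source, torch_dtype=torch.float16)
    if kind == "embeddings_only":
        # Adds the learned token (e.g. <cat-toy>) to the base model's tokenizer.
        pipe.load_textual_inversion(output_dir)
    return pipe.to("cuda")
```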

5.2. Run the Stable Diffusion pipeline
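The generation cell is not reproduced here. The sketch below calls a loaded pipeline with the standard `StableDiffusionPipeline` call arguments (`num_images_per_prompt`, `num_inference_steps`, `guidance_scale`, `generator`) and returns the PIL images from the output's `.images` attribute. The helper name and default values are assumptions. Because the function only relies on the call signature, it can be exercised with a stand-in object.

```python
def generate(pipe, prompt, num_samples=2, num_inference_steps=50,
             guidance_scale=7.5, seed=None):
    """Run a Diffusers text-to-image pipeline and return a list of PIL images."""
    kwargs = dict(
        num_images_per_prompt=num_samples,
        num_inference_steps=num_inference_steps,
        guidance_scale=guidance_scale,
    )
    if seed is not None:
        import torch  # only needed for reproducible sampling on the GPU
        kwargs["generator"] = torch.Generator(device="cuda").manual_seed(seed)
    return pipe(prompt, **kwargs).images
```

Remember to include the placeholder token in the prompt, e.g. `generate(pipe, "a <cat-toy> in mad max fury road")`.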

Step-6: Save the newly created concept

Our model training is complete and we have also tested it by successfully generating some image samples. We shall now save the model artifacts to preserve our newly created concept. This will also enable us to launch an Inference server against it, as described in the next section.

6.1. Save the Model artifacts
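The save cell is not reproduced here. A minimal sketch, assuming the training run wrote everything to an output directory: copy that directory into `model_artifacts_dir`, the folder that the upload steps below refer to. With a loaded DreamBooth pipeline, Diffusers' `pipe.save_pretrained(model_artifacts_dir)` achieves the same thing. The helper name `save_artifacts` is hypothetical.

```python
import os
import shutil


def save_artifacts(output_dir, model_artifacts_dir):
    """Copy everything the training run wrote to `output_dir` into `model_artifacts_dir`."""
    if not os.path.isdir(output_dir):
        raise FileNotFoundError(f"training output not found: {output_dir!r}")
    # dirs_exist_ok (Python 3.8+) makes re-running the cell safe.
    shutil.copytree(output_dir, model_artifacts_dir, dirs_exist_ok=True)
    return sorted(os.listdir(model_artifacts_dir))
```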

6.2. Create Model on TIR

Go to TIR Dashboard

Choose a project, navigate to the Models section and click on Create Model

Enter a model name of your choosing (e.g. stable-diffusion)

Select Model Type as Custom & click on CREATE

You will now see details of EOS (E2E Object Storage) bucket created for this model.

EOS provides an S3-compatible API to upload or download content. We will be using the MinIO CLI in this tutorial.

Copy the Setup Host command from the Setup MinIO CLI tab. We will use it to set up the MinIO CLI in the next step.

Note

In case you forget to copy the setup host command for MinIO CLI, don’t worry. You can always go back to model details and get it again.

6.3. Upload the Artifacts to Model Bucket

We have already created the model on TIR. Let’s set it up on our notebook and upload the artifacts to the model.

Go back to the TIR notebook named stable-diffusion-fine-tune, which we used to fine-tune the model in the previous steps, and follow the steps below:

In JupyterLab, click New Launcher and select Terminal

Set up the MinIO CLI by running the Setup Host command copied in Step-6.2

Go to the directory model_artifacts_dir, where we saved the model artifacts in Step-6.1, using the command cd $model_artifacts_dir

Copy the model artifacts to the model bucket:

Go to TIR Dashboard >> Models >> Select your model >> Copy the cp command from the Setup MinIO CLI tab

The copy command would look like this:

```
mc cp -r <MODEL_NAME> stable-diffusion/stable-diffusion-854588
```

Here, we will replace <MODEL_NAME> with * to upload all contents of the current folder to the bucket.

We will also append $model_artifacts_dir/ to the bucket path, so that the contents are uploaded to a folder named $model_artifacts_dir inside the bucket. Your copy command would then look something like this: mc cp -r * stable-diffusion/stable-diffusion-854588/$model_artifacts_dir/

Your model artifacts will be saved successfully upon upload completion.
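If you would rather trigger the upload from a notebook cell than from the terminal, the sketch below builds the same mc cp argument list. It expands `*` with `glob`, as the shell would, so no shell is needed. The helper name is hypothetical. The bucket path in the usage comment is the sample one from the copy command above, and yours will differ. The sketch assumes that the MinIO CLI is already set up.

```python
import glob
import os


def mc_upload_command(local_dir, bucket_path, model_artifacts_dir):
    """Build the equivalent of `mc cp -r * <bucket_path>/<model_artifacts_dir>/` run inside local_dir."""
    # Same entries the shell's `*` would match (hidden files excluded in both cases).
    entries = sorted(glob.glob(os.path.join(local_dir, "*")))
    if not entries:
        raise FileNotFoundError(f"nothing to upload in {local_dir!r}")
    destination = f"{bucket_path.rstrip('/')}/{model_artifacts_dir.strip('/')}/"
    return ["mc", "cp", "-r", *entries, destination]


# Example usage (requires `import subprocess`):
# cmd = mc_upload_command(model_artifacts_dir, "stable-diffusion/stable-diffusion-854588",
#                         os.path.basename(model_artifacts_dir))
# subprocess.run(cmd, check=True)
```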

Step-7: Create Inference Server against our newly trained model

What now remains is to create an Inference Server against our trained model and serve API requests.

Note

If you are not familiar with creating inference endpoints on TIR, follow this tutorial for a detailed, step-by-step guide on Model Endpoint (Inference) creation for Stable Diffusion.

7.1. Create a new Model Endpoint in TIR

Create a new Model Endpoint in TIR with Stable Diffusion Framework and a GPU Machine Plan.

[Important!] In the Model Details subsection, choose the Model that we created in Step-6.2. Make sure to specify the $model_artifacts_dir path. This is necessary because our trained model artifacts are present in this directory.

7.2. Generate your API Token

The model endpoint API requires a valid auth token, which you’ll need for the remaining steps. Generate a new API Token from the API Tokens section (or use an existing token, if you have already created one). An API key and an auth token will be generated. Copy the auth token; you will need it in the next step.

7.3. Make API Request to generate Image output

The final step is to send API requests to the created model endpoint and generate images using text prompts. We will use a TIR notebook for this.

Once your model endpoint is Ready, visit its Sample API Request section and copy the Python code

Launch a TIR Notebook with the PyTorch or Stable Diffusion image on any basic machine plan. Once it is in the Running state, launch it and start a new notebook (untitled.ipynb) in JupyterLab

Paste the Sample API Request code (for Python) in the notebook cell

Copy the Auth Token generated in Step-7.2 & use it in place of $AUTH_TOKEN in the Sample API Request

Replace the prompt string in the payload with the prompt below. It uses the placeholder token (in our case, <cat-toy>) to get the desired output:

"a <cat-toy> in mad max fury road"

Execute the code and send the request. You’ll get a list of tensors as output.

This is because the Stable Diffusion v2.1 model endpoint returns the generated images as a list of PyTorch tensors. To view the generated images, copy the code below, paste it into a notebook cell, and execute it.

```python
import torch
import torchvision.transforms as transforms
from IPython.display import display


def display_images(tensor_image_data_list):
    """Convert the returned PyTorch tensors to PIL images and display them."""
    for tensor_data in tensor_image_data_list:
        tensor_image = torch.tensor(tensor_data.get("data"))  # rebuild the tensor
        pil_img = transforms.ToPILImage()(tensor_image)       # convert to a PIL image
        display(pil_img)                                       # render inline in Jupyter
        # To save the generated images, uncomment the line below
        # pil_img.save(tensor_data.get("name"))


# `response` is the object returned by the Sample API Request code
if response.status_code == 200:
    display_images(response.json().get("predictions"))
```

That’s it! We have successfully taught our model a new concept, <cat-toy>.

The Stable Diffusion model supports various other parameters for controlling the generation of image output. Refer to Supported parameters for image generation for more details.

Conclusion

Through this tutorial, we fine-tuned the Stable Diffusion v2.1 model using two training methods, namely DreamBooth and Textual Inversion. By giving just 3-5 images as input, we taught the model a new concept and personalized it on our own images.

After fine-tuning, we also saw how to store the trained model artifacts in TIR Model Storage and launch Inference Servers with them to serve API requests.