Datasets

Datasets let you organize, share, and easily access your data from notebooks and training code. At the moment, TIR supports EOS (Object Storage) backed datasets; PVC (Disk) backed datasets will be introduced soon.

How does it work?

Datasets allow you to mount and access EOS storage buckets as a local file system. This lets you read objects in your bucket using standard file system semantics.

With datasets, you can load training data from your local machine or from other cloud providers, and access it from your notebook as a mounted file system (under the /datasets directory).
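Because a mounted dataset behaves like a local directory, ordinary file APIs are enough to explore it. The following is a minimal sketch, assuming the dataset appears at /datasets/<dataset-name>; only the /datasets root is stated here, so the per-dataset subdirectory and the helper name list_dataset_files are assumptions for illustration.

```python
from pathlib import Path


def list_dataset_files(dataset_name, root="/datasets"):
    """Return the sorted relative paths of all files under a dataset mount.

    Assumes the dataset is mounted at <root>/<dataset_name> (hypothetical layout).
    """
    mount = Path(root) / dataset_name
    if not mount.is_dir():
        raise FileNotFoundError(f"dataset mount not found: {mount}")
    # rglob walks the mount recursively; keep regular files only.
    return sorted(
        p.relative_to(mount).as_posix() for p in mount.rglob("*") if p.is_file()
    )
```

In a notebook, list_dataset_files("paws") would then return the object keys of the paws dataset as relative file paths, provided the mount layout matches the assumption.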

Note

Define datasets to manage and control your data, even if you don't plan to mount them on notebooks or training jobs.

Benefits

Using datasets offers the following benefits:

You can create a shared EOS bucket that holds training data for your team. Using common data sources across the team improves the reproducibility of results.

Less configuration overhead. When you create a dataset through TIR, a new storage bucket and its access credentials are created automatically. You can copy the mc (minio cli) commands shown in the UI and run them right away on your local desktop or in hosted notebooks to upload data.

You can access your training data without having to set up access information on hosted notebooks or training jobs.

You can stream training data instead of downloading all of it to the disk. This is also useful in distributed training jobs.
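The streaming benefit can be illustrated with a generator that reads files lazily in fixed-size chunks rather than loading everything up front. This is a minimal sketch, assuming the mount serves reads on demand; the function name stream_chunks and the 1 MiB default chunk size are illustrative choices, not part of TIR.

```python
from pathlib import Path
from typing import Iterator


def stream_chunks(directory, chunk_size=1 << 20) -> Iterator[bytes]:
    """Yield the contents of every file under `directory` in chunks.

    Files are visited in sorted path order, and only one chunk is held
    in memory at a time.
    """
    for path in sorted(Path(directory).rglob("*")):
        if path.is_file():
            with path.open("rb") as handle:
                # read() returns b"" at end of file, which ends the loop.
                while chunk := handle.read(chunk_size):
                    yield chunk
```

A training loop can consume the generator directly (for chunk in stream_chunks(...)), so processing starts before the whole dataset has been read.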

Usage

WebUI

You can define a dataset through the TIR dashboard. The UI also allows you to browse the objects in the bucket and upload files. We recommend using the mc client or any S3-compliant CLI to upload large amounts of data. If you have data with other cloud providers, visit the Import Data from Other Cloud Providers section.

SDK

Using the TIR SDK, you can quickly set up the mc CLI and start importing or exporting data from EOS buckets.

Getting Started

Prerequisites

Install the MinIO CLI (mc) on your local desktop from here. Skip this step if mc is already installed on your local machine.

Create a new dataset

Go to the TIR dashboard

Make sure you are in the right project, or create a new project

Go to Datasets tab

Click CREATE DATASET

Choose the bucket type New EOS Bucket. This creates a new EOS bucket tied to your account, along with access keys for it.

Enter a name for your dataset (e.g. paws)

Click on CREATE

You will see a popup with Bucket name, Access Key and Secret Key

Locate and open the Setup Minio CLI (mc) tab (in the popup) and copy the mc command to set up the host

Run the copied mc command in the command line on your local desktop. This sets up the mc alias that you will transfer data to.

Copy data into Dataset

On your local desktop, open the command line and enter the following command:

mc ls <dataset-name>/

You should see the EOS bucket name in the response. Copy this bucket name, then edit and run the following copy command:

mc cp --recursive <mylocaldirectory> <dataset-name>/<eos-bucket>
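When uploads are scripted rather than typed by hand, the same copy command can be assembled and run from Python. This is a sketch under stated assumptions: mc is installed and on PATH, the alias has already been set up, and the helper names mc_cp_command and upload are hypothetical.

```python
import shutil
import subprocess


def mc_cp_command(source_dir, alias, bucket):
    """Build the argument list for a recursive mc copy into an EOS bucket."""
    return ["mc", "cp", "--recursive", source_dir, f"{alias}/{bucket}/"]


def upload(source_dir, alias, bucket):
    """Run the copy; raises if mc is missing or exits with an error."""
    if shutil.which("mc") is None:
        raise RuntimeError("mc not found on PATH; install the MinIO client first")
    subprocess.run(mc_cp_command(source_dir, alias, bucket), check=True)
```

For example, upload("./images", "paws", "<eos-bucket>") runs the same mc cp command shown above, with the alias and bucket name filled in.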

Working with Datasets

Import Data from local machine

To import data from your local machine, TIR provides two options:

Upload from browser (TIR Dashboard >> Datasets >> Click on Dataset >> Objects Browser tab)

Use an S3-compliant CLI (such as mc):

Install the MinIO CLI (mc) on your local desktop from here.

In the TIR Dashboard, locate and click your dataset in the Datasets section. At the bottom of the screen, you will find the setup tabs. Choose the Minio (mc) tab and copy the setup instructions for the alias.

mc alias set <dataset-name> <eos-endpoint-url> <access key> <secret key>

After setting up the alias, you can upload a complete directory using the command below:

mc cp --recursive <source_dir> <dataset-name>/<eos-bucket>/

Import Data from Amazon S3

After configuring aliases for your dataset and for AWS S3 in mc (minio cli), run the following command. The --attr flag is optional and sets metadata on the copied objects:

mc cp --recursive <s3-alias>/<s3-bucket> <dataset-name>/<eos-bucket>/ --attr Cache-Control=max-age=90000,min-fresh=9000\;key1=value1\;key2=value2

To configure dataset alias for EOS bucket, follow instructions in Import Data from local machine
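The --attr value is a list of key=value pairs joined by semicolons, which is easy to mistype in a shell. The sketch below builds that string from a dictionary and assembles the copy command as an argument list; the helper names format_attr and mc_cp_with_attrs are hypothetical. Because the list is meant to be passed to subprocess without a shell, the semicolons need no backslash escaping.

```python
def format_attr(attrs):
    """Join metadata pairs into the key=value;key=value form used by --attr."""
    return ";".join(f"{key}={value}" for key, value in attrs.items())


def mc_cp_with_attrs(source, target, attrs):
    """Build a recursive mc copy command that attaches metadata to each object."""
    return ["mc", "cp", "--recursive", "--attr", format_attr(attrs), source, target]
```

The resulting list can be handed to subprocess.run(..., check=True) once both aliases are configured.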

Import Data from GCS

After configuring aliases for your dataset and for GCS in mc (minio cli), run the following command. The --attr flag is optional and sets metadata on the copied objects:

mc cp --recursive <gcs-alias>/<gcs-bucket> <dataset-name>/<eos-bucket>/ --attr Cache-Control=max-age=90000,min-fresh=9000\;key1=value1\;key2=value2

To configure dataset alias for EOS bucket, follow instructions in Import Data from local machine

Upload data from TIR Jupyter Notebook

You will need to configure the dataset alias in mc to upload data to the EOS bucket. You can achieve this in two ways:

From the TIR Dashboard, you can find the mc alias and mc cp commands for the respective dataset. Use these commands to upload data from notebook cells.

Using our Python SDK, you can configure the mc alias without having to go to the TIR Dashboard. Run the following steps from notebook cells:

from e2enetworks.cloud import tir

tir.init()

tir.set_dataset_alias("dataset-name")

To upload or download data to the notebook instance:

# from the output copy the eos bucket name and use it in cp command

!mc ls <dataset-name>/

!mc cp --recursive ~/data/ <dataset-name>/<eos-bucket>/

Permanently Migrate from Other Cloud

If you have a data science workflow or an application that uploads data to another cloud storage service, you can replace its endpoints with EOS endpoints, which can improve data access performance for your training jobs on TIR.
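For an application that already talks to S3-compatible storage, switching endpoints is often a configuration change rather than a code change. The sketch below assumes the application uses boto3 (a third-party library, not mentioned in this guide) and that the EOS endpoint is https://objectstore.e2enetworks.net; both are assumptions, so confirm the endpoint and keys shown for your dataset in the TIR dashboard. The helper names are hypothetical.

```python
# Assumed EOS endpoint; verify against the value shown in your dashboard.
EOS_ENDPOINT = "https://objectstore.e2enetworks.net"


def eos_client_kwargs(access_key, secret_key, endpoint_url=EOS_ENDPOINT):
    """Return keyword arguments that point an S3 client at EOS."""
    return {
        "service_name": "s3",
        "endpoint_url": endpoint_url,
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }


def make_eos_client(access_key, secret_key):
    """Create a boto3 S3 client that sends requests to EOS."""
    import boto3  # imported lazily so the helper above works without boto3

    return boto3.client(**eos_client_kwargs(access_key, secret_key))
```

Existing upload code that calls client.upload_file(...) can then keep working against the EOS bucket once the client is created this way.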

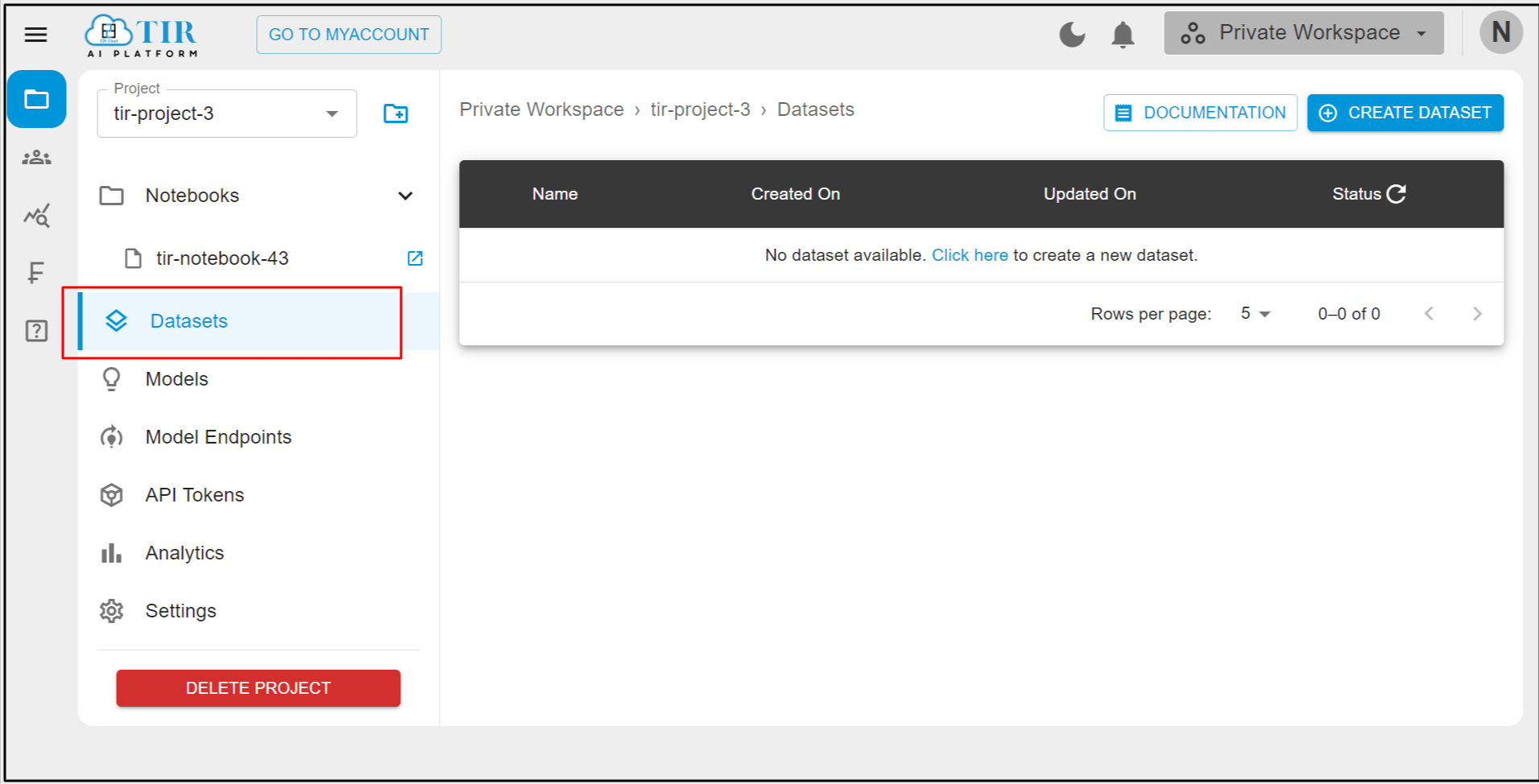

How to Create Datasets?

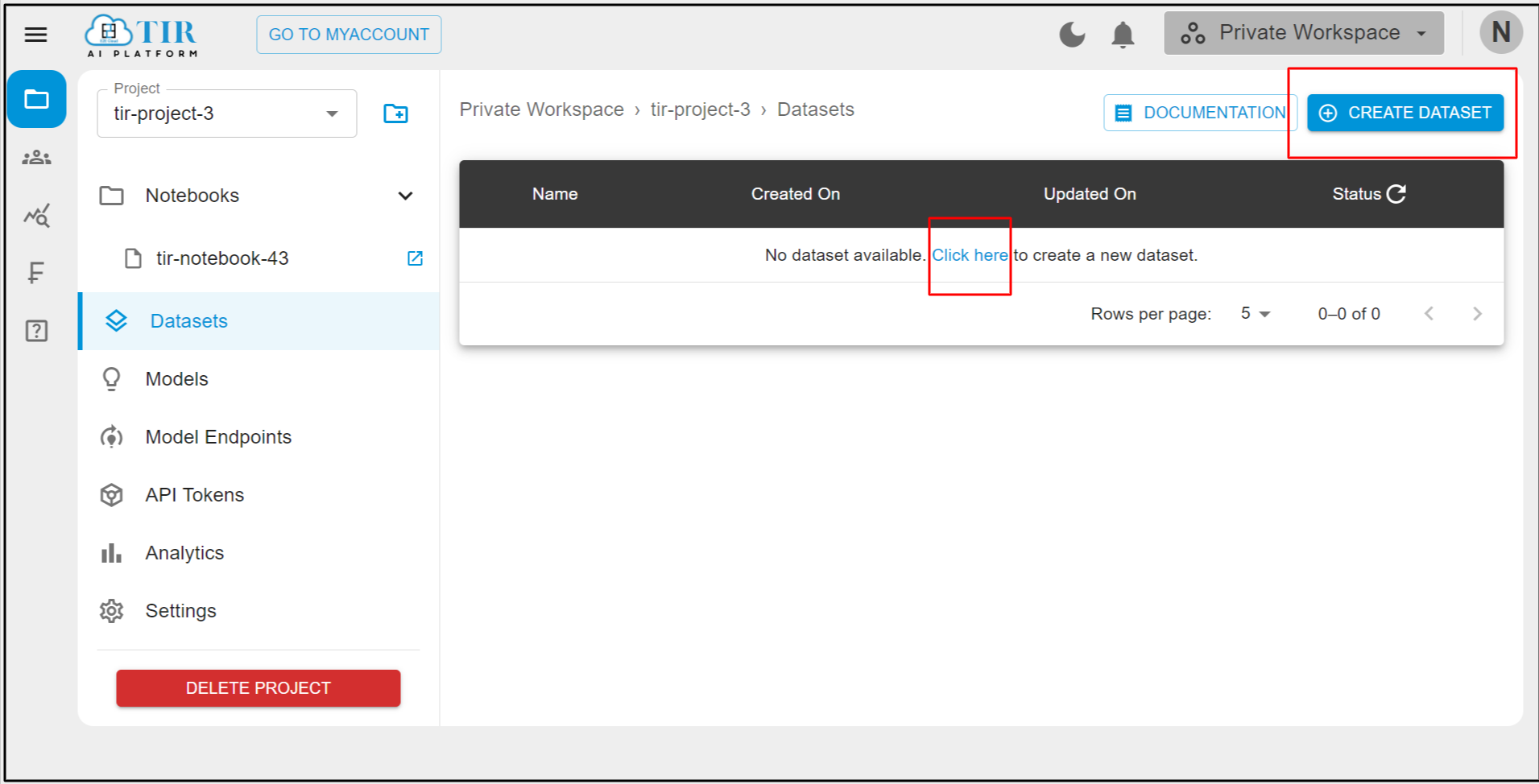

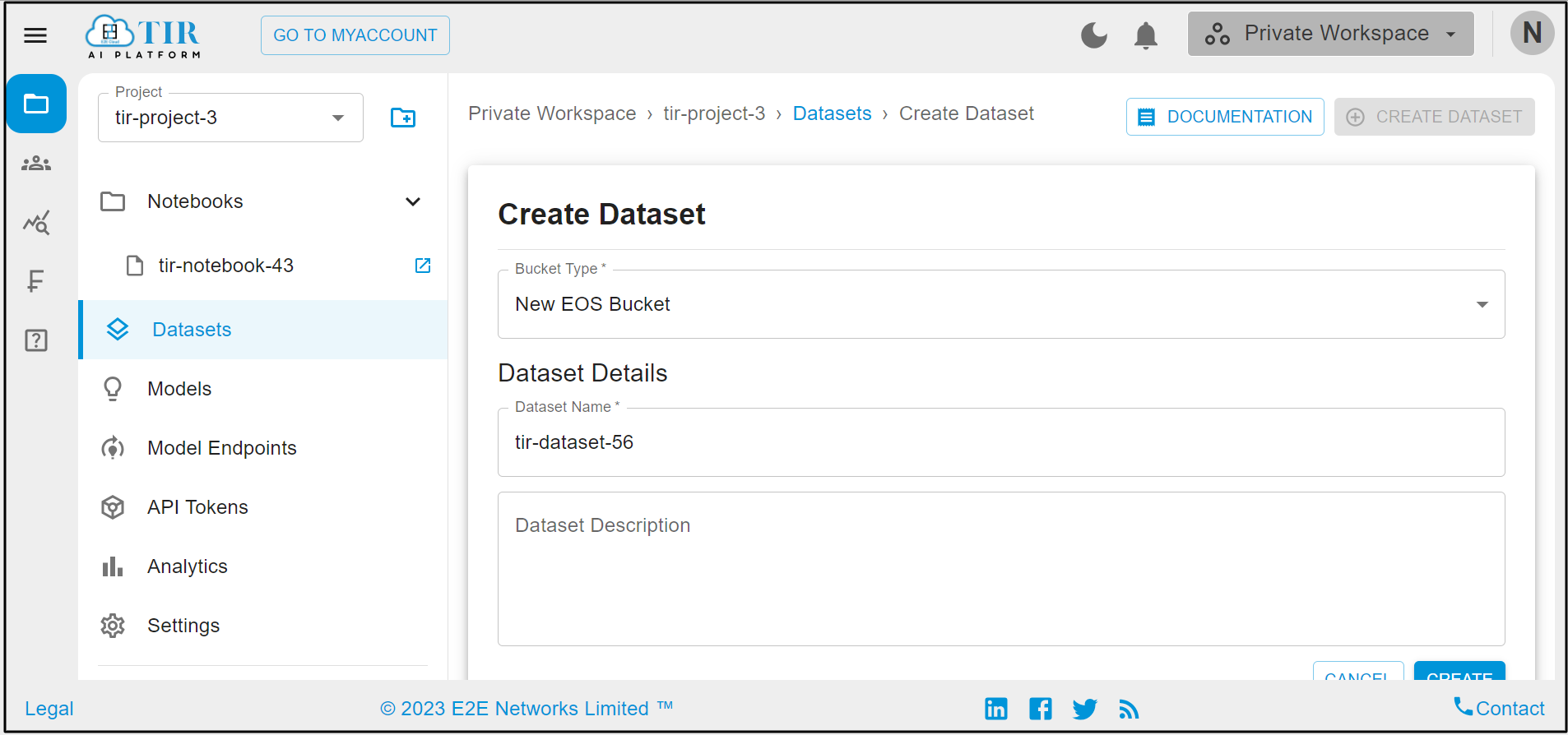

To create a dataset, click the Datasets button in the side navigation bar.

Then click the Create Dataset button.

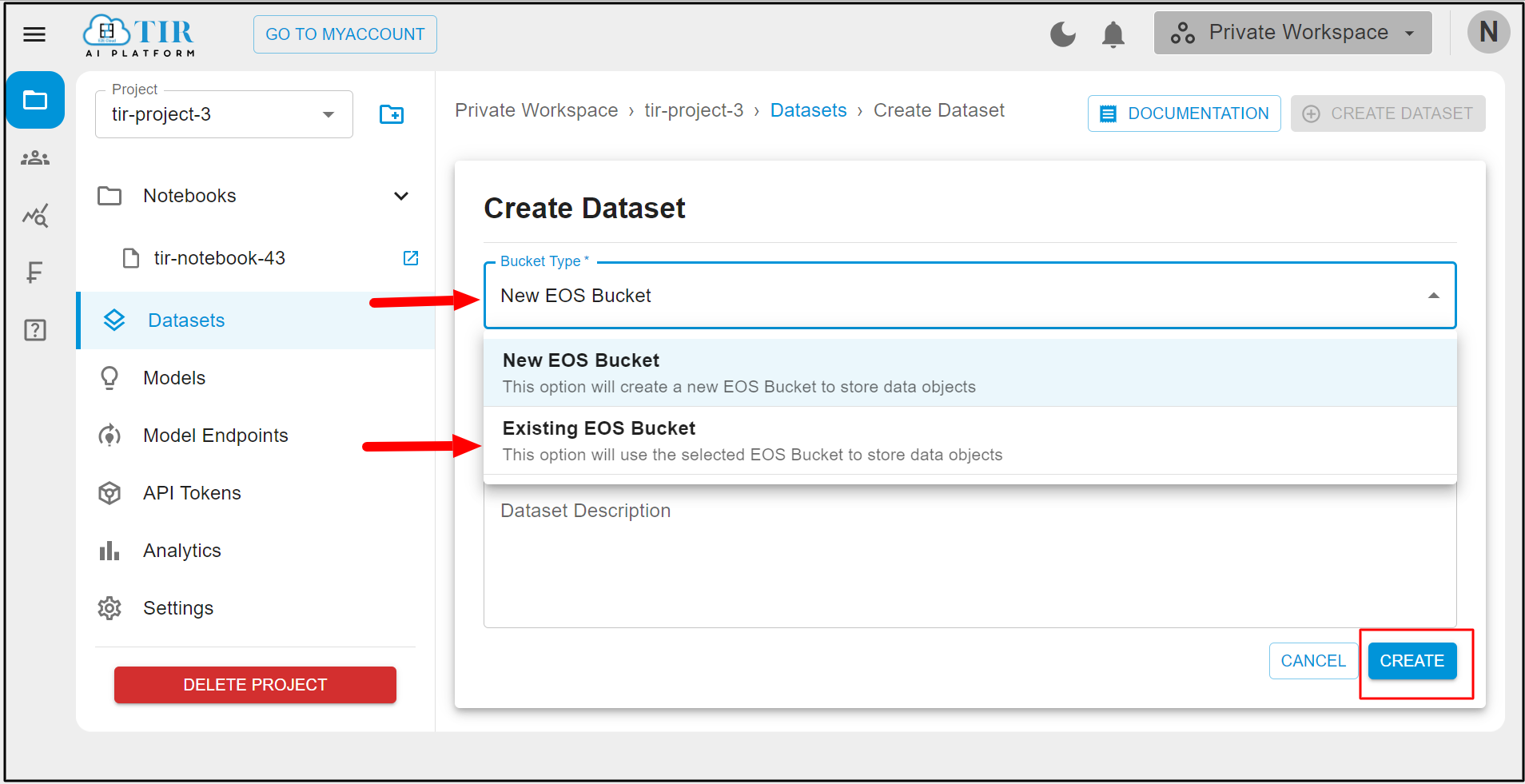

After you click the Create Dataset button, the dataset creation form opens. Select the bucket type: either create a new bucket or use an existing one. This example uses a new EOS bucket, so enter the dataset details and click the Create button.

To create a dataset with an existing bucket, select Existing EOS bucket from the dropdown, enter the details, and click the Create button.

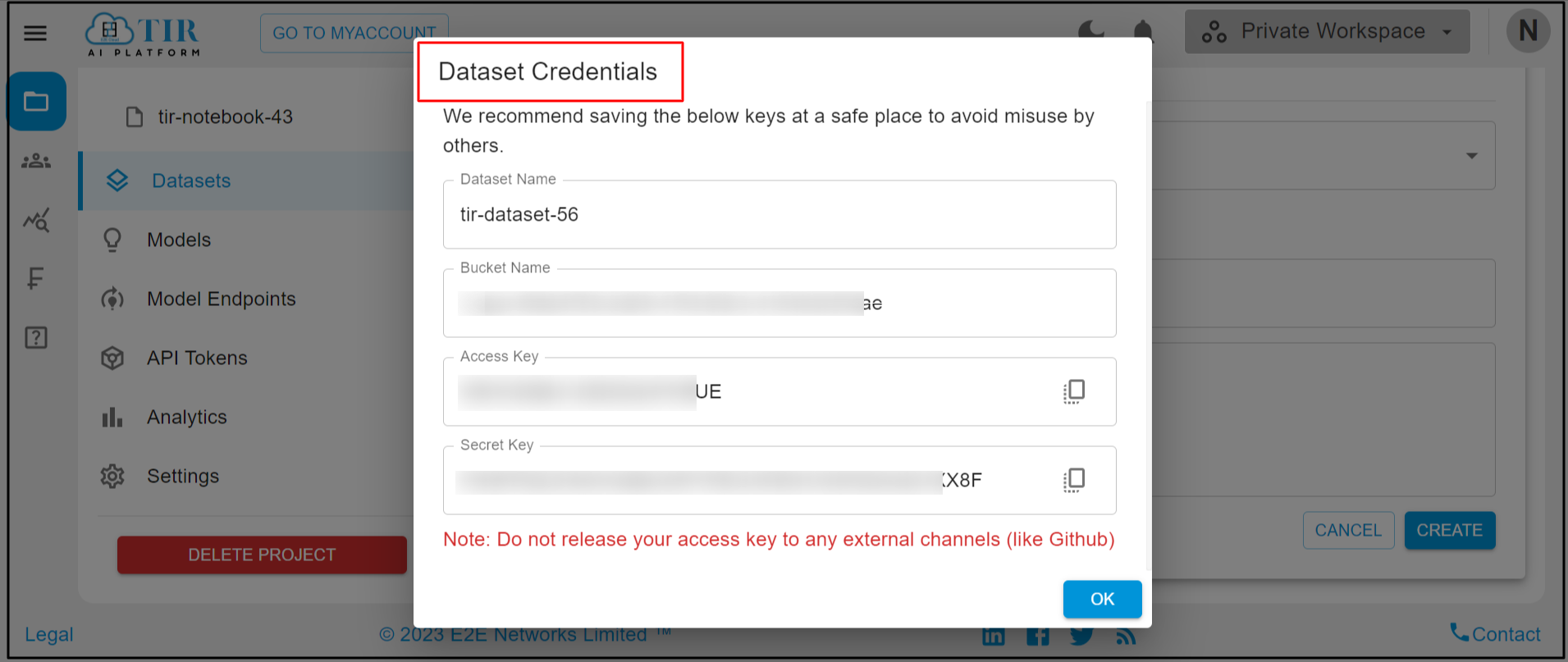

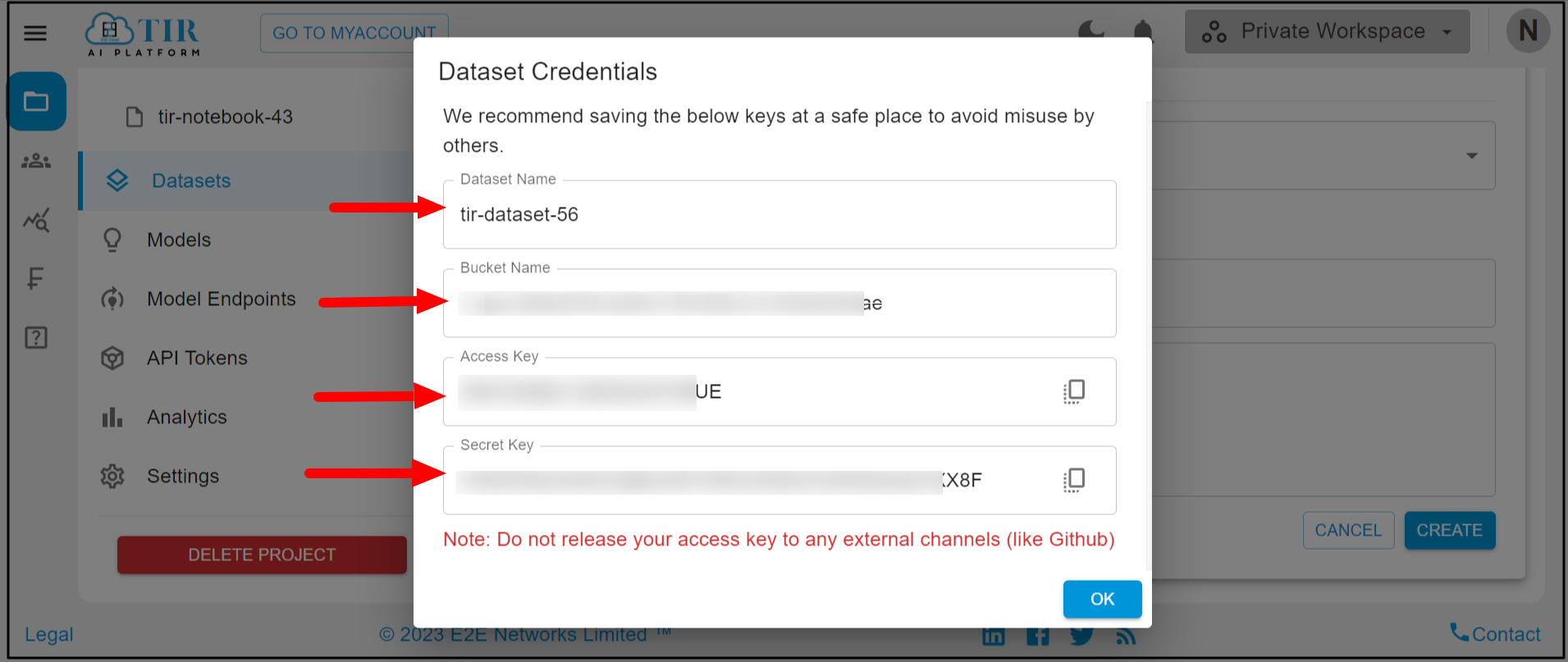

After you click the Create button, a Dataset Credentials dialog box appears, showing the dataset name, Bucket Name, Access Key and Secret Key.

After the dataset is created, it is shown as below. Click the OK button to continue.

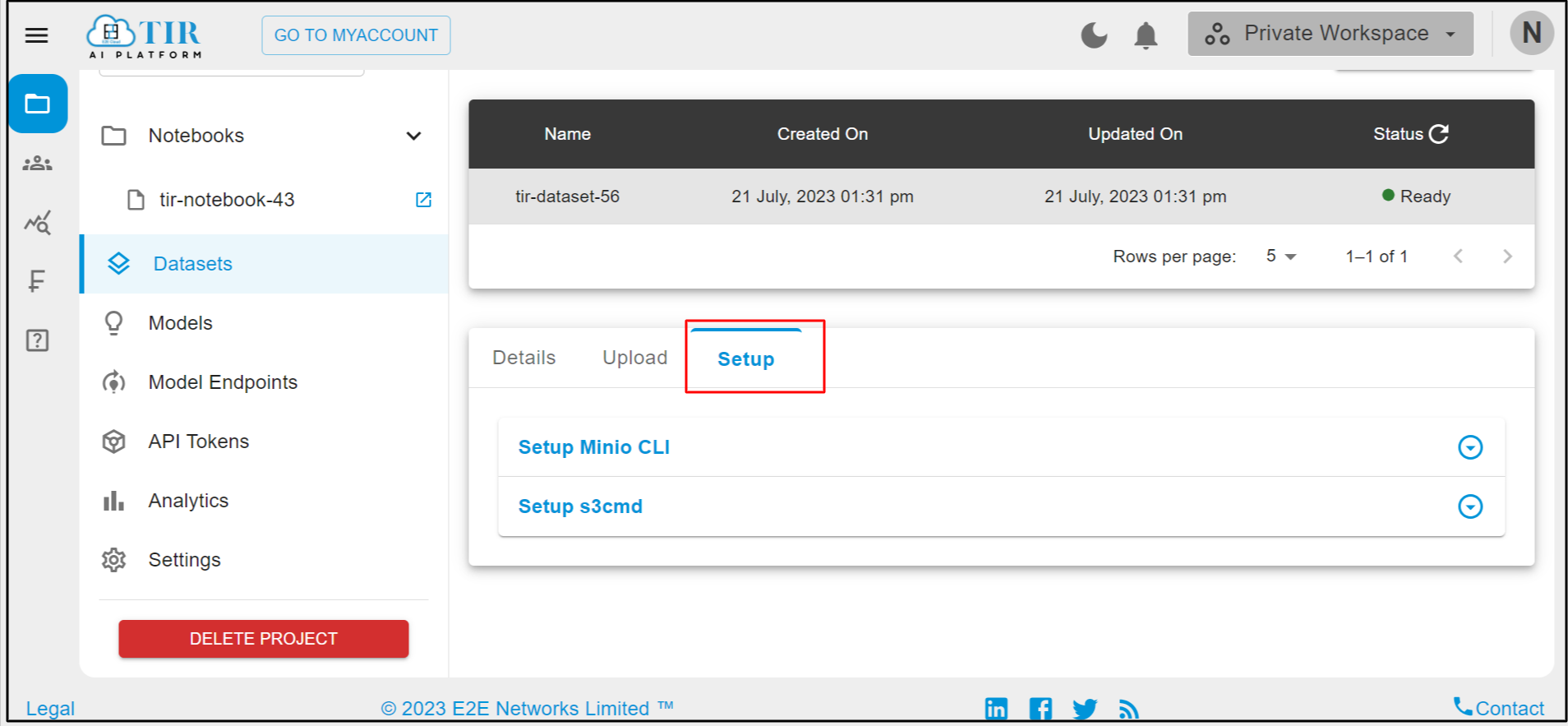

After you click the OK button, the Details, Upload & Setup page is shown.

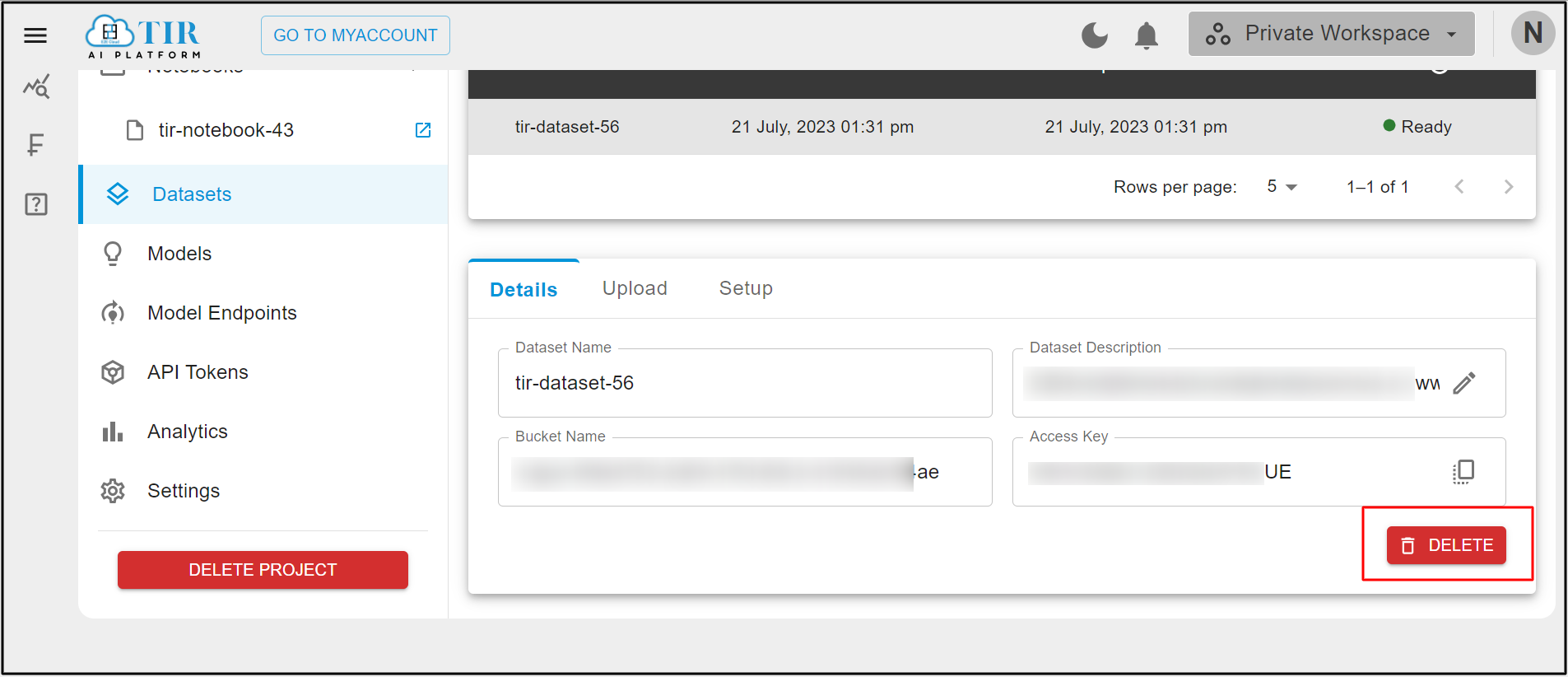

Delete Dataset

Click on the Delete button to delete the dataset.

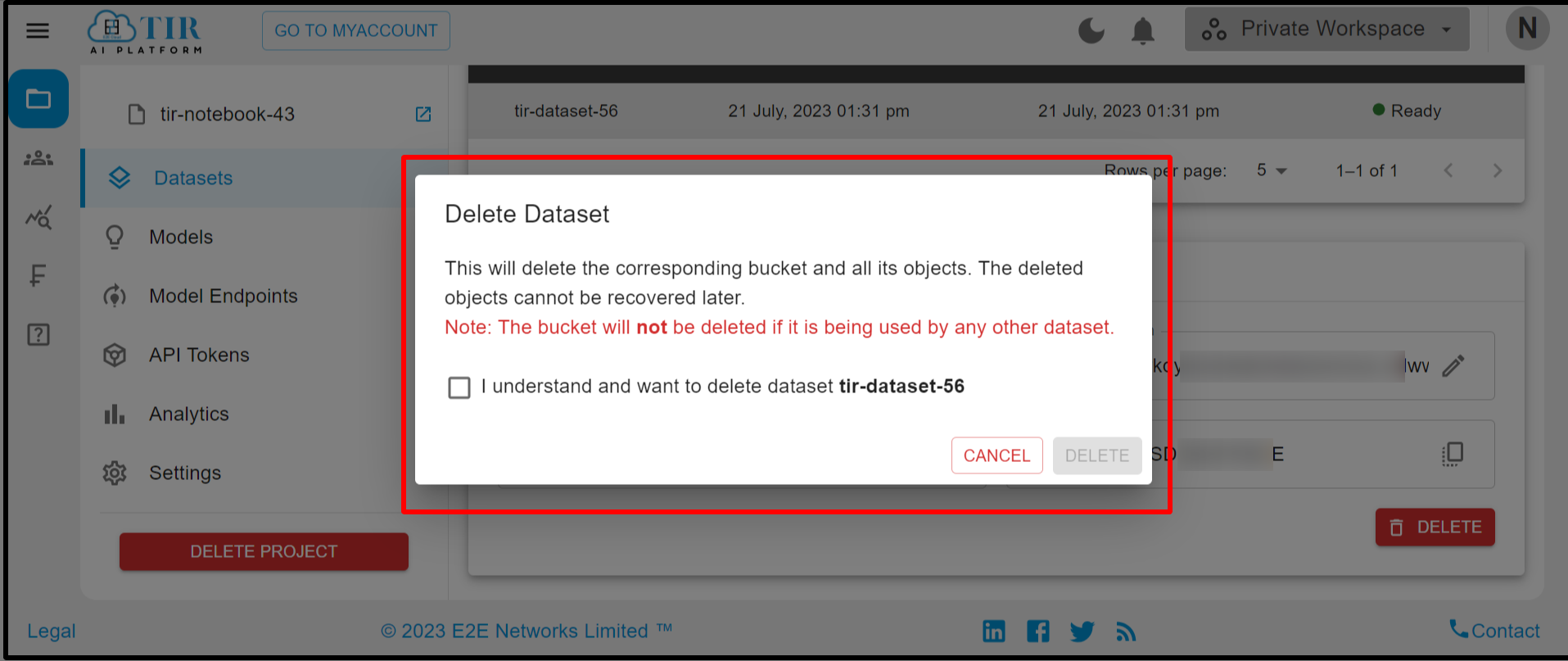

After you click the Delete button, a popup appears asking you to confirm deletion of the dataset.

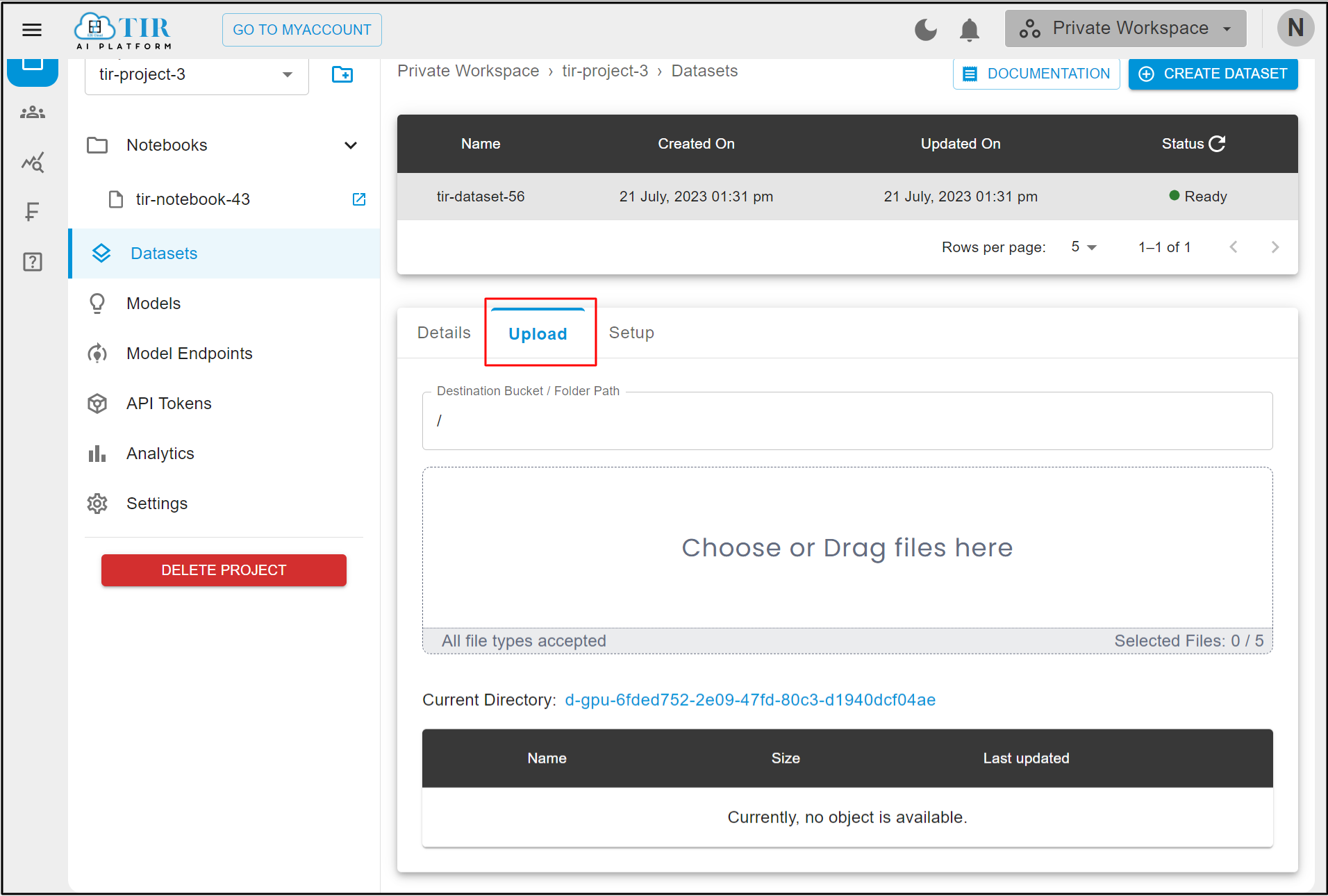

Click the Upload tab to see the Bucket path and upload files fields.

Click Setup to see the Setup Minio CLI and Setup s3cmd options.